Table Of Contents, Chapter 1

| Section | Title |
| --- | --- |
| 1-1 | Definition Of Computers |
| 1-2 | Parts Of A Computer |
| 1-2-1 | Central Processing Unit (CPU) |
| 1-2-2 | Random Access Memory (RAM) |
| 1-2-3 | Graphics Processing Unit (GPU) |
| 1-2-4 | Motherboard |
| 1-3 | Binary Code |
| 1-4 | Operating Systems |
| 1-5 | Open-source Community |

1-1. Definition Of Computers

In the world of technology, computers are at the center of everything. A computer is an electronic device that stores and processes data, typically in binary format, according to the instructions given to it. To put it simply, computers are very powerful processing devices and can be really fast. But they are also dumb as hell (for now). They can't do anything on their own; we have to give them instructions.

Those instructions can be given to computers in various ways, most commonly through programming languages. There are tons of programming languages out there, and each is suited to certain kinds of tasks. Which one you choose is up to you. But before diving into programming languages and which one to choose, let's learn the very low-level basics of computers.

1-2. Parts Of A Computer

A modern-day computer has four main parts (excluding storage and the power supply): the CPU, the RAM, the GPU and the one holding them all together, the motherboard. Let's dive into each of them in detail (with some history as well).

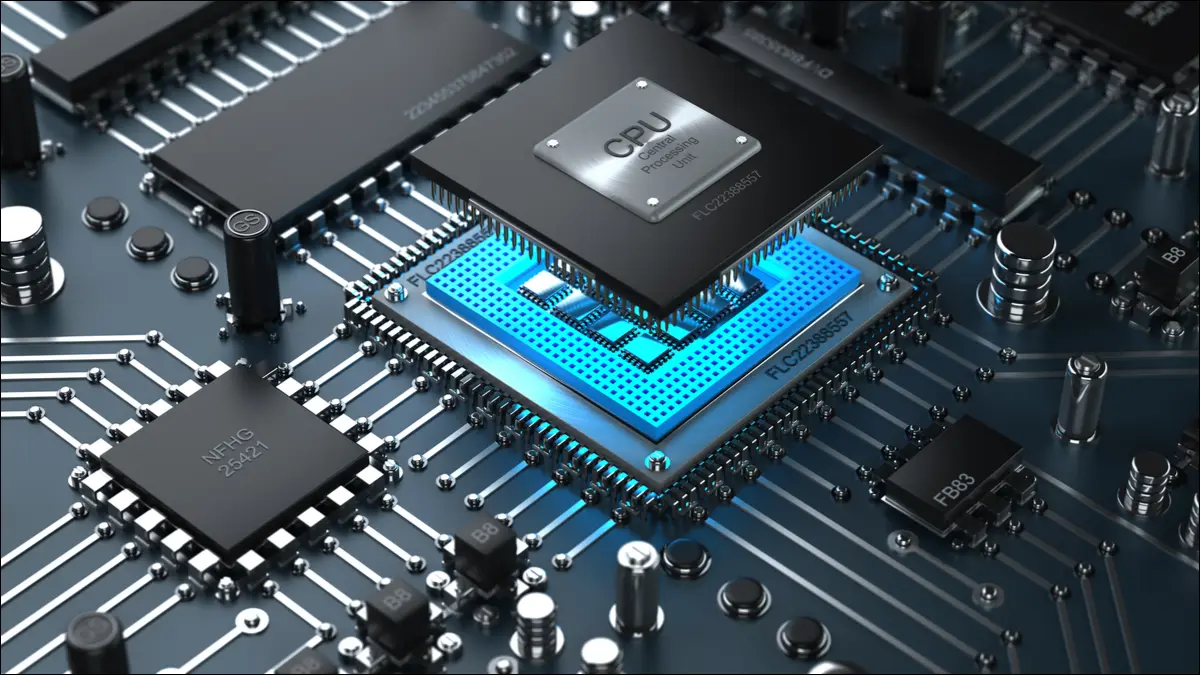

1-2-1. Central Processing Unit (CPU)

The primary component, the brain of the computer, is called the central processing unit, or as you always hear it, the CPU. A CPU's job is to perform arithmetic, logic, and other operations to transform input data into more useful output information.

How did CPU technology advance over time?

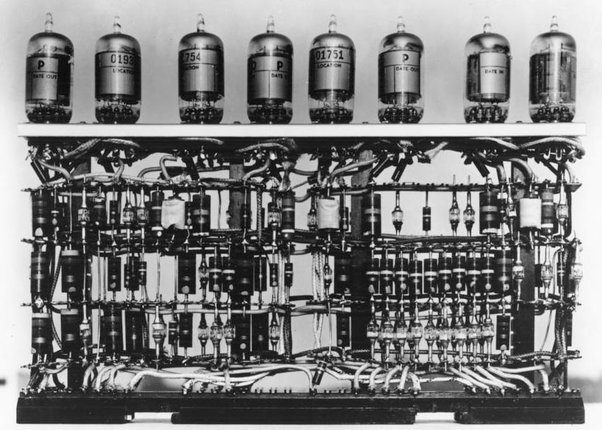

The earliest computers used vacuum tubes for processing. Machines like the ENIAC and UNIVAC were large and bulky, consumed a lot of power and generated significant heat. The invention of the transistor in the late 1940s revolutionized CPU technology. Transistors replaced vacuum tubes, making computers smaller, more reliable and more energy-efficient. Integrated circuits (ICs), which combine multiple transistors and other components on a single chip, were invented in the late 1950s and came into wide use in computers during the 1960s. CPUs became even smaller and faster, leading to the emergence of microprocessors.

an image of vacuum tubes

a zoomed image of transistors

Microprocessors

Microprocessors combined the entire CPU on a single IC chip, which made computers more accessible and led to the development of personal computers. The earliest microprocessors handled only 4 or 8 bits of data at a time; 16-bit and then 32-bit designs followed. In the early 2000s, 64-bit x86 CPUs emerged, which allowed for larger memory addressing and supported more extensive data processing.

As transistor density increased, it became challenging to boost single-core CPU performance further. Instead, CPU manufacturers began using multi-core processor architectures to integrate multiple cores onto a single chip.

Check out these CPU emulators, which let you watch classic processors work in your browser:

- Zilog Z80 (8-bit): https://floooh.github.io/visualz80remix/

- ARM1: http://visual6502.org/sim/varm/armgl.html

- 6502: http://visual6502.org/JSSim/index.html

- 6800: http://visual6502.org/JSSim/expert-6800.html

Modern-day processors

With the rise of mobile devices, power efficiency has become crucial. CPU manufacturers now develop low-power and energy-efficient CPUs for modern portable devices like smartphones and tablets.

As artificial intelligence (AI) and graphics-intensive tasks have become more prevalent, specialized processors such as graphics processing units (GPUs) and AI accelerators are now used to handle these workloads.

Continued research and development in nanotechnology and materials science has paved the way for microscopic transistors and more powerful CPUs. Quantum computing and other emerging technologies hold the potential to further develop computer processor technology.

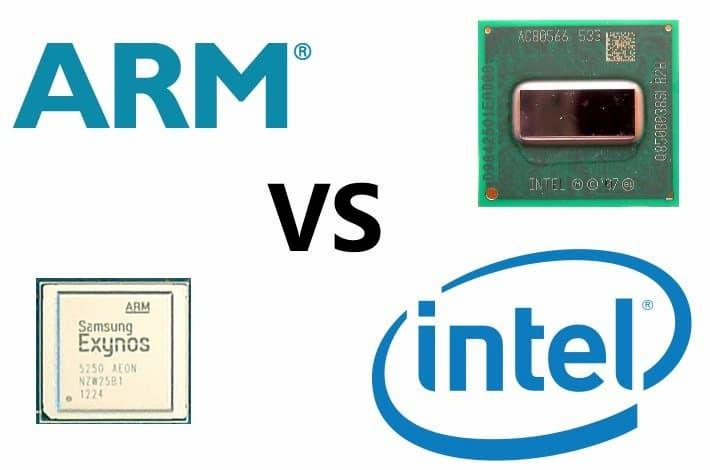

x86 and x64 vs ARM (instruction sets)

x86 is a widely used computer architecture for central processing units (CPUs). It has become the dominant architecture for personal computers and servers. The name "x86" comes from the 8086, an early processor released by Intel®, and its successors, whose model numbers also ended in "86". x86 CPUs use a complex instruction set computer (CISC) design, in which a single instruction can perform a complex, multi-step operation (for example, reading a value from memory and adding it to a register). Over the years, the x86 architecture has undergone significant advancements while staying highly compatible with older software, which lets it run a vast array of applications and has contributed to its widespread adoption in the computing industry. Traditional x86 processors evolved up to 32 bits. The bit width determines how much data the CPU can process at a time and how much memory it can address; the latter is referred to as memory addressing. A 32-bit CPU can address up to 4 GB of memory (RAM).

On the other hand, x64 (also referred to as x86-64) extends the architecture to 64-bit memory addressing and processing. 64-bit x86 CPUs appeared in the early 2000s and became popular afterwards. They are the newer and more capable version of x86 processors, and today almost no new computers use the 32-bit x86 architecture. For comparison, a 64-bit address can in theory reach $2^{64}$ bytes, roughly 16 million TB of RAM, although real processors and operating systems support far less than that.
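Both memory limits follow from simple arithmetic: an n-bit address can name $2^n$ different bytes. Here is a minimal sketch, assuming byte-addressable memory and binary units (1 GiB = $2^{30}$ bytes, 1 TiB = $2^{40}$ bytes). The function name `addressable_bytes` is only for illustration:

```python
# Sketch: how many bytes can an n-bit address reach?
# Assumes byte-addressable memory and binary units.
GIB = 2 ** 30  # gibibyte
TIB = 2 ** 40  # tebibyte


def addressable_bytes(address_bits: int) -> int:
    """Each extra address bit doubles the number of distinct addresses."""
    return 2 ** address_bits


print(addressable_bytes(32) // GIB)  # 4 (GiB, the 32-bit limit)
print(addressable_bytes(64) // TIB)  # 16777216 (TiB, about 16.8 million)
```

Each step from 32 to 64 bits doubles the address space 32 more times. That is why the jump is so enormous.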

Keep in mind that since 64-bit processors are the upgraded version of 32-bit processors, to this day we still call them x86 processors (short for x86-64), but we also specify that they are 64-bit. 64-bit processors are also backward compatible, meaning that a 64-bit processor can run 32-bit programs.

x86 processors tend to focus on performance using Complex Instruction Set Computing (CISC). ARM processors, on the other hand, use Reduced Instruction Set Computing (RISC), a simpler set of instructions that helps make them more power efficient. Nowadays, the ARM architecture is becoming more and more popular due to its great power efficiency, and almost every mobile device uses an ARM processor. In 2020, Apple took a gamble and began moving its desktop and notebook computers from Intel x86 processors to its own ARM-based chips, and the gamble paid off.

Be aware that the x86 architecture is owned by Intel Corporation, and its main licensee is Intel's competitor, AMD (which, in turn, designed the 64-bit x86-64 extension). So these two companies are by far the main suppliers of x86 processors. The ARM architecture, on the other hand, is owned by ARM Holdings. ARM doesn't produce processors itself; it designs the architectures and sells licenses to other companies. Some of the most successful companies producing ARM processors are Apple, Qualcomm, MediaTek, HiSilicon and Samsung.

A CPU can contain more than a single core. Each core acts as a mini brain of its own. With multiple cores, a CPU can run several streams of instructions (threads) at the same time, so heavy workloads that can be split into parallel parts finish faster; this is what multithreading takes advantage of. A CPU also has its own small, fast memory called cache, separate from RAM. Some cache levels (typically L1 and L2) belong to a single core, while others (typically L3) are shared between the cores. A CPU cache is much faster than RAM.
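To see why a small, fast cache helps, here is a toy model. It assumes a fully associative cache with least-recently-used (LRU) replacement. Real CPU caches are organized into sets and cache lines, so treat this only as an illustration. The names `simulate_cache` and `accesses` are hypothetical:

```python
from collections import OrderedDict


def simulate_cache(addresses, capacity):
    """Count hits and misses for a toy LRU cache holding `capacity` entries."""
    cache = OrderedDict()  # keys are addresses; order tracks how recently each was used
    hits = misses = 0
    for addr in addresses:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)  # mark as most recently used
        else:
            misses += 1  # in a real machine, this data would come from slower RAM
            cache[addr] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used entry
    return hits, misses


# A loop that keeps touching the same few addresses benefits a lot from caching.
accesses = [0, 1, 2, 0, 1, 2, 0, 1, 2, 3]
print(simulate_cache(accesses, capacity=3))  # (6, 4)
```

Programs tend to reuse the same data over and over. That is why most accesses hit the cache and only a few have to wait for RAM.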

The CPU clock speed refers to how many cycles per second a CPU executes. It’s also known as the clock rate, processor frequency or CPU frequency. It is measured in gigahertz (GHz), which represents billions of cycles per second. Essentially, it indicates how quickly the CPU works through its instructions: each cycle is one tick in which the CPU can make progress on its work. A higher clock speed generally means better performance in common tasks, such as gaming. However, keep in mind that other factors, like core count, instructions per clock cycle and bus speed, also impact overall performance. And remember, CPUs can temporarily boost their clock speed for demanding tasks, but sustaining high speeds requires a good CPU cooler!
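GHz means billions of cycles per second, so the length of one cycle is simply the inverse of the frequency. The sketch below works that out. It also computes a rough upper bound on throughput by multiplying clock speed, core count and an assumed instructions-per-cycle (IPC) value. The 3.5 GHz, 8 cores and IPC of 4 are made-up example numbers, and real throughput also depends on the other factors mentioned above:

```python
def cycle_time_ns(clock_ghz: float) -> float:
    """Duration of one clock cycle in nanoseconds (1 GHz = 1e9 cycles per second)."""
    return 1.0 / clock_ghz  # 1 / (GHz * 1e9) seconds = 1 / GHz nanoseconds


def peak_instructions_per_second(clock_ghz: float, cores: int, ipc: float) -> float:
    """Theoretical upper bound: cycles per second * cores * instructions per cycle."""
    return clock_ghz * 1e9 * cores * ipc


print(cycle_time_ns(4.0))                                 # 0.25 (ns per cycle at 4 GHz)
print(peak_instructions_per_second(3.5, cores=8, ipc=4))  # 112000000000.0
```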

To briefly summarize the CPU:

What Is a CPU?

- The central processing unit (CPU) is like the brain of your computer. It’s the most important part because it processes instructions from programs, the operating system, and other components.

- Think of it as the conductor of an orchestra, coordinating all the tasks in your computer.

Instruction Set

- CPUs follow a specific set of instructions called the “instruction set.”

- Different CPU families have different instruction sets: Intel and AMD CPUs share the x86-64 instruction set, while ARM CPUs use a completely different one.

- For example, most Windows PCs use the x86-64 instruction set.

Cores and Caches

- CPUs have multiple cores (like mini-brains) that can process instructions simultaneously.

- Cores can also use multi-threading to speed things up even more.

- They use fast memory called caches, which store frequently used data for faster access.

Overall Role

- In a nutshell, the CPU transforms input data into meaningful output.

1-2-2. Random Access Memory (RAM)

What is RAM (random access memory)?

Random access memory (RAM) is the hardware in a computing device that provides temporary storage for the operating system (OS), software programs and any other data in current use so they're quickly available to the device's processor. RAM is often referred to as a computer's main memory, as opposed to the processor cache or other memory types.

Random access memory is considered part of a computer's primary memory. It is much faster to read from and write to than secondary storage, such as hard disk drives (HDDs), solid-state drives (SSDs) or optical drives. However, RAM is volatile; it retains data only as long as the computer is on. If power is lost, so is the data. When the computer is rebooted, the OS and other files must be reloaded into RAM, usually from an HDD or SSD.

How does RAM work?

The term random access, or direct access, as it applies to RAM is based on the facts that any storage location can be accessed directly via its memory address and that the access can be random. RAM is organized and controlled in a way that enables data to be stored and retrieved directly to and from specific locations. Other types of storage -- such as an HDD or CD-ROM -- can also be accessed directly and randomly, but the term random access isn't used to describe them.

RAM is similar in concept to a set of boxes organized into columns and rows, with each box holding either a 0 or a 1 (binary). Each box has a unique address that is determined by counting across the columns and down the rows. A set of RAM boxes is called an array, and each box is known as a cell.

To find a specific cell, the RAM controller sends the column and row address down a thin electrical line etched into the memory chip. Each row and column in a RAM array has its own address line. Any data that's read from the array is returned on a separate data line.
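The grid picture above fits in a few lines of code. Given the number of columns, a cell's address is its row times the row width plus its column, and an address can be split back into a row and a column. This is a simplified sketch that assumes a single array with a hypothetical 1,024 columns. Real DRAM chips add banks and other layers of addressing:

```python
COLUMNS = 1024  # hypothetical number of columns per row


def to_address(row: int, column: int) -> int:
    """Count across the columns and down the rows to get a unique cell address."""
    return row * COLUMNS + column


def to_row_column(address: int) -> tuple[int, int]:
    """Split an address back into the (row, column) pair sent to the array."""
    return divmod(address, COLUMNS)


addr = to_address(row=3, column=17)
print(addr)                 # 3089
print(to_row_column(addr))  # (3, 17)
```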

RAM is physically small and stored in microchips. The microchips are gathered into memory modules, which plug into slots in a computer's motherboard. A bus, or a set of electrical paths, is used to connect the motherboard slots to the processor.

RAM is also small in terms of the amount of data it can hold. A typical laptop computer might come with 8 GB or 16 GB of RAM, while a hard disk might hold 10 TB of data. A hard drive stores data on a magnetized surface that looks like a vinyl record. Alternatively, an SSD stores data in memory chips that, unlike RAM, are non-volatile. They don't require constant power and won't lose data if the power is turned off.

Types of RAM

RAM comes in two primary forms, roughly matching main memory and CPU cache:

- Dynamic random access memory (DRAM). DRAM is typically used for a computer's main memory. As was previously noted, it needs continuous power to retain stored data. DRAM is cheaper than SRAM and offers a higher density, but it produces more heat, consumes more power and is not as fast as SRAM.

Each DRAM cell stores a bit as an electrical charge held in a tiny capacitor (charged or discharged). Because the capacitor slowly leaks, this data must be refreshed constantly, at least once every few tens of milliseconds. A transistor serves as a gate, determining whether a capacitor's value can be read or written.

- Static random access memory (SRAM). This type of RAM is typically used for the system's high-speed cache, such as L1 or L2. Like DRAM, SRAM also needs constant power to hold on to data, but it doesn't need to be continually refreshed the way DRAM does. SRAM is more expensive than DRAM and has a lower density, but it produces less heat, consumes less power and offers better performance.

In SRAM, instead of a capacitor holding a charge, each bit is held by a small circuit of transistors (a flip-flop) that stays in one of two stable states, one serving as 1 and the other as 0. Static RAM requires several transistors to retain one bit of data, compared to dynamic RAM, which needs only one transistor per bit. This is why SRAM takes up much more chip area and is more expensive than an equivalent amount of DRAM.
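A quick calculation puts numbers on the density difference. It assumes the common six-transistor (6T) SRAM cell and one transistor per DRAM bit, and it ignores DRAM's capacitors and all the surrounding control circuitry. The 32 KiB size is just an example, in the range of a typical L1 cache:

```python
def transistors_needed(size_bytes: int, transistors_per_bit: int) -> int:
    """Transistors required just for the storage cells holding `size_bytes` of data."""
    return size_bytes * 8 * transistors_per_bit


SIZE = 32 * 1024  # 32 KiB, an example size

sram = transistors_needed(SIZE, transistors_per_bit=6)  # assumed 6T SRAM cell
dram = transistors_needed(SIZE, transistors_per_bit=1)  # 1 transistor (+ 1 capacitor) per bit
print(sram, dram, sram // dram)  # 1572864 262144 6
```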

History of RAM: RAM vs. SDRAM

RAM was originally asynchronous because the RAM microchips had a different clock speed than the computer's processor. This was a problem as processors became more powerful and RAM couldn't keep up with the processor's requests for data.

In the early 1990s, clock speeds were synchronized with the introduction of synchronous dynamic RAM, or SDRAM. By synchronizing a computer's memory with the inputs from the processor, computers were able to execute tasks faster.

However, the original single data rate SDRAM (SDR SDRAM) reached its limit quickly. Around the year 2000, double data rate SDRAM (DDR SDRAM) was introduced. DDR SDRAM moves data twice in every clock cycle, on both the rising and the falling edge of the clock signal.

Since its introduction, DDR SDRAM has continued to evolve. The second generation was called DDR2, followed by DDR3 and DDR4, and finally DDR5, the latest generation. Each generation has brought higher data throughput and reduced power use. However, the generations are not compatible with each other. They use different voltages and signaling, and their modules are notched differently, so, for example, a DDR4 module will not fit in a DDR5 slot.
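You can see the effect of double data rate in the numbers. Peak bandwidth is the memory I/O clock, times the transfers per cycle, times the width of the data bus. This is a rough sketch that assumes a standard 64-bit (8-byte) module and uses DDR4-3200 as the example: its I/O clock is 1,600 MHz, which gives 3,200 million transfers per second. Real-world bandwidth is lower than this theoretical peak:

```python
def peak_bandwidth_gb_s(io_clock_mhz: float, bus_width_bits: int = 64,
                        transfers_per_cycle: int = 2) -> float:
    """Theoretical peak bandwidth in gigabytes per second (1 GB = 1e9 bytes)."""
    transfers_per_second = io_clock_mhz * 1e6 * transfers_per_cycle
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


print(peak_bandwidth_gb_s(1600))                         # 25.6 (DDR: two transfers per cycle)
print(peak_bandwidth_gb_s(1600, transfers_per_cycle=1))  # 12.8 (same clock, single data rate)
```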

RAM vs. virtual memory (aka swap)

A computer can run short on main memory, especially when running multiple programs simultaneously. Operating systems can compensate for physical memory shortfalls by creating virtual memory.

With virtual memory, the system temporarily transfers data from RAM to secondary storage and increases the virtual address space. This is accomplished by using active memory in RAM and inactive memory in the secondary storage to form a contiguous address space that can hold an application and its data.

With virtual memory, a system can load larger programs or multiple programs running at the same time, letting each operate as if it had far more memory than is physically installed, without having to add more RAM. The virtual address space can be much larger than the amount of installed RAM. A program's instructions and data are initially stored at virtual addresses. When the program is executed, those addresses are translated to actual (physical) memory addresses.
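Address translation works like a table lookup. The sketch below assumes 4 KiB pages (a common page size) and a tiny hypothetical page table stored in a dictionary. Real systems use multi-level page tables and dedicated hardware to make this fast, so this only shows the idea:

```python
PAGE_SIZE = 4096  # 4 KiB pages, a common choice

# Hypothetical page table: virtual page number -> physical frame number.
# None means the page is currently swapped out to secondary storage.
page_table = {0: 5, 1: 2, 2: None}


def translate(virtual_address: int) -> int:
    """Map a virtual address to a physical address using the page table."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    if page not in page_table:
        raise ValueError(f"invalid address: page {page} is not mapped")
    frame = page_table[page]
    if frame is None:
        # A real OS would handle this "page fault" by loading the page back into RAM.
        raise LookupError(f"page fault: virtual page {page} is not in RAM")
    return frame * PAGE_SIZE + offset


print(translate(4100))  # 8196 (page 1, offset 4 -> frame 2 * 4096 + 4)
```

The offset inside a page never changes. Only the page number is swapped for a frame number, which is what lets the OS place pages anywhere in RAM or move them out to storage.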

One downside to virtual memory is that it can make a computer run slowly. When RAM is full, data has to be moved back and forth between RAM and the much slower secondary storage (this is called swapping or paging). With physical memory alone, programs work directly from RAM.

How Do CPU And RAM Interact? A Summary

1. Loading the application:

- When you start an application on your computer, the application's executable file is loaded from the storage device (such as a hard drive or SSD) into the RAM. The RAM is used as a temporary storage space for the application and its data while it is running.

2. CPU processing instructions:

- The CPU is responsible for executing the instructions of the application. The CPU fetches these instructions from the RAM and processes them sequentially. The CPU's processing speed and efficiency determine how quickly the application can perform tasks.

3. Data transfer between CPU and RAM:

- As the CPU processes instructions, it needs to access data from the RAM for calculations and operations. Data is transferred back and forth between the CPU and RAM through the system bus, which is a communication pathway that connects the CPU, RAM, and other components of the computer.

4. Caching:

- To speed up data access, modern CPUs have built-in cache memory that stores frequently accessed data and instructions. The cache memory is faster than RAM, so the CPU can access this data more quickly, reducing the time it takes to execute instructions.

5. Virtual memory:

- If the RAM is insufficient to hold all the data and instructions needed by an application, the operating system can use virtual memory, which is a portion of the hard drive or SSD that acts as an extension of the RAM. Data is swapped between the RAM and virtual memory as needed, although this process is slower than accessing data directly from the RAM.

6. Multitasking:

- Modern operating systems allow multiple applications to run simultaneously by dividing the CPU's processing time among different tasks. The operating system manages the scheduling of tasks and ensures that each application gets a fair share of CPU time.

In summary, the CPU and RAM work together by loading and executing applications, transferring data between the CPU and RAM, utilizing cache memory for faster access, and using virtual memory when RAM is insufficient. This collaboration ensures that applications run smoothly and efficiently on a computer system.
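You can sketch the CPU's fetch, decode and execute cycle with a toy machine. It uses a hypothetical mini instruction set invented for illustration (LOAD, ADD, STORE, HALT), not any real CPU's. The list `memory` plays the role of RAM, holding both the program and its data, and the variable `acc` is the CPU's single register:

```python
def run(memory: list) -> list:
    """Run a toy program stored in `memory`; return memory after it halts."""
    pc = 0   # program counter: address of the next instruction
    acc = 0  # accumulator: the single CPU register in this toy machine
    while True:
        opcode, operand = memory[pc]  # fetch the next instruction from "RAM"
        pc += 1
        if opcode == "LOAD":          # decode, then execute
            acc = memory[operand]     # read data from RAM into the register
        elif opcode == "ADD":
            acc += memory[operand]    # the arithmetic happens inside the CPU
        elif opcode == "STORE":
            memory[operand] = acc     # write the result back to RAM
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction {opcode!r}")


# Program: memory[6] = memory[4] + memory[5]
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", 0),
    2,  # address 4: data
    3,  # address 5: data
    0,  # address 6: the result goes here
]
print(run(program)[6])  # 5
```

Every step in the loop that touches `memory` stands for a trip to RAM. Caches exist to make exactly those trips cheaper.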

1-2-3. Graphics Processing Unit (GPU)

What Does a GPU Do?

The graphics processing unit, or GPU, has become one of the most important types of computing technology, both for personal and business computing. Designed for parallel processing, the GPU is used in a wide range of applications, including graphics and video rendering. Although they’re best known for their capabilities in gaming, GPUs are becoming more popular for use in creative production and artificial intelligence (AI).

GPUs were originally designed to accelerate the rendering of 3D graphics. Over time, they became more flexible and programmable, enhancing their capabilities. This allowed graphics programmers to create more interesting visual effects and realistic scenes with advanced lighting and shadowing techniques. Other developers also began to tap the power of GPUs to dramatically accelerate additional workloads in high performance computing (HPC), deep learning, and more.

How does a GPU work?

Modern GPUs typically contain a number of multiprocessors. Each has a shared memory block, plus a number of processors and corresponding registers. The GPU itself has constant memory, plus device memory on the board it is housed on.

Each GPU works slightly differently depending on its purpose, the manufacturer, the specifics of the chip, and the software used for coordinating the GPU. For instance, Nvidia’s CUDA parallel processing software allows developers to specifically program the GPU with almost any general-purpose parallel processing application in mind.
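The core idea behind GPU programming models such as CUDA is that the same small function runs once for every element, with each thread picking its element by index. CUDA calls this function a kernel, not to be confused with the operating system kernel. The plain-Python sketch below only imitates that model. `vector_add_kernel` and `launch` are hypothetical names, and the loop in `launch` runs sequentially, whereas a GPU would run those calls in parallel across its many cores:

```python
def vector_add_kernel(i: int, a: list, b: list, out: list) -> None:
    """Work done by ONE thread: add the i-th pair of elements."""
    out[i] = a[i] + b[i]


def launch(kernel, n: int, *args) -> None:
    """Stand-in for a GPU launch: call the kernel once per thread index.
    On a real GPU these n calls would run in parallel, not in a loop."""
    for thread_index in range(n):
        kernel(thread_index, *args)


a = [1, 2, 3, 4]
b = [10, 20, 30, 40]
out = [0] * len(a)
launch(vector_add_kernel, len(a), a, b, out)
print(out)  # [11, 22, 33, 44]
```

Each call touches only its own index, so no call depends on another. That independence is what makes this kind of work easy to spread across thousands of GPU cores.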

GPUs can be standalone chips, known as discrete GPUs, or integrated with other computing hardware, known as integrated GPUs (iGPUs).

Discrete GPUs

Discrete GPUs exist as a chip that is fully dedicated to the task at hand. While that task has traditionally been graphics, discrete GPUs can now also be used as dedicated processors for tasks like machine learning (ML) or complex simulations.

When used in graphics, the GPU typically resides on a graphics card that slots into a motherboard. In other tasks, the GPU may reside on a different card or slot directly onto the motherboard itself.

Integrated GPUs

In the early 2010s, manufacturers began combining the CPU and GPU on a single chip, known as the iGPU. Among the first of these for PCs were Intel’s Celeron, Pentium and Core lines, which remain popular across laptops and PCs.

Another type of iGPU is the system on a chip (SoC) that contains components like a CPU, GPU, memory, and networking. These are the types of chips typically found in smartphones.

What's the difference between a GPU and a CPU?

The main difference between a CPU and a GPU is their purpose in a computer system. They have different roles depending on the system. For example, they serve different purposes in a handheld gaming device, a PC, and a supercomputer with several server cabinets.

In general, the CPU handles full system control plus management and general-purpose tasks. Conversely, the GPU handles highly parallel, compute-intensive tasks such as video editing or machine learning.

More specifically, CPUs are optimized for performing tasks like these:

- System management

- Multitasking across different applications

- Input and output operations

- Network functions

- Control of peripheral devices

- Memory and storage system multitasking

Watch this video made by Nvidia itself on the difference between CPU and GPU: https://youtu.be/-P28LKWTzrI?si=IMStppGfrSwVJ_cD

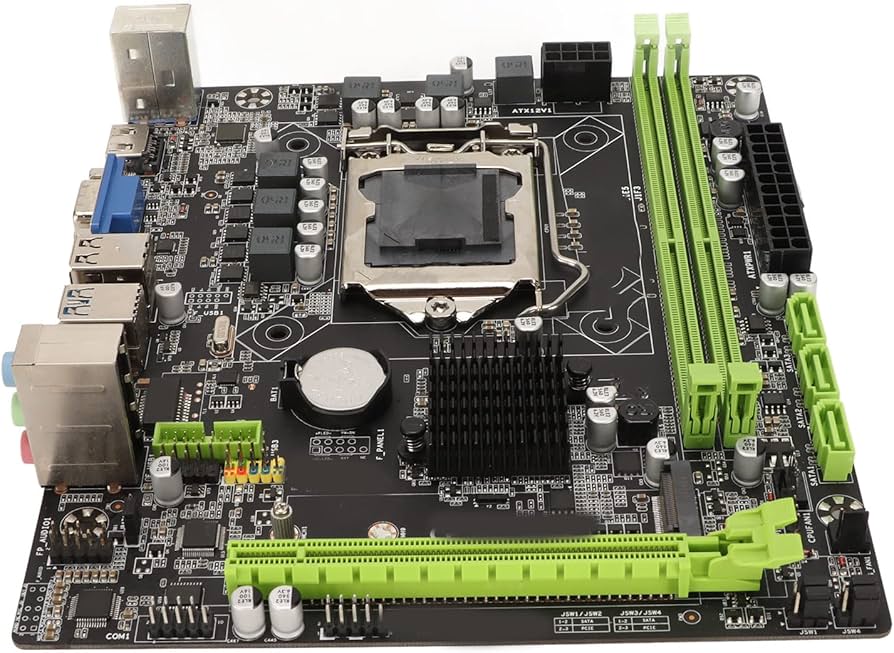

1-2-4. Motherboard

What Is a Motherboard?

A computer’s motherboard is typically the largest printed circuit board in a machine’s chassis. It distributes electricity and facilitates communication between the central processing unit (CPU), random access memory (RAM) and every other component of the computer’s hardware. There is a broad range of motherboards, each intended to be compatible with specific models and sizes of computers.

Since different kinds of processors and memories are intended to function best with certain types of motherboards, it is difficult to find a motherboard that is compatible with every type of CPU and memory. Hard drives, on the other hand, are generally compatible with a wide variety of motherboards and may be used with most brands and types.

The motherboard sits inside the computer case and is the point of connection for most of the computer’s components and peripherals. In a tower computer, you will find it mounted on either the right or the left side of the case, and it is the most significant circuit board in the machine.

The earliest motherboards for personal computers included relatively few components. The very first IBM PC motherboard included only a CPU and some card slots. Users inserted various components, including memory and controllers for floppy drives, into the slots provided.

Compaq became the first company to utilize a motherboard that was not based on a design created by IBM. The new architecture utilized a CPU made by Intel. When Compaq’s sales began to take off, other businesses quickly followed suit, even though several companies in the industry believed it was a risky move.

By the 1990s, Intel had a dominant share of the market for personal computer motherboards. Today, Asus, Gigabyte Technology and Micro-Star International (MSI) are the three most influential companies in this industry, with Asus being the largest motherboard maker on the planet. Intel itself announced in 2013 that it would stop making desktop motherboards.

1-3. Binary Code

We now understand how the various computer parts work. But one question remains: how do they communicate? Well, the communication language of computers, and of every other electronic device we use today, is binary. Binary is made of just 0s and 1s. Think of it like Morse code. Morse code uses dots and dashes, which can be translated into human-readable text, and binary code works in a similar way. Here, 0 stands for off and 1 stands for on, which mirrors how the transistors inside a CPU work (off and on states). But unlike Morse code, binary has a mathematical logic: each digit is worth a power of 2, doubling from right to left. See the example below:

| decimal | binary | conversion |
| --- | --- | --- |
| 0 | 0 | $0 \times 2^0$ |
| 1 | 1 | $1 \times 2^0$ |
| 2 | 10 | $1 \times 2^1 + 0 \times 2^0$ |
| 3 | 11 | $1 \times 2^1 + 1 \times 2^0$ |
| 4 | 100 | $1 \times 2^2 + 0 \times 2^1 + 0 \times 2^0$ |
| 5 | 101 | $1 \times 2^2 + 0 \times 2^1 + 1 \times 2^0$ |
| 6 | 110 | $1 \times 2^2 + 1 \times 2^1 + 0 \times 2^0$ |
| 7 | 111 | $1 \times 2^2 + 1 \times 2^1 + 1 \times 2^0$ |
| 8 | 1000 | $1 \times 2^3 + 0 \times 2^2 + 0 \times 2^1 + 0 \times 2^0$ |
| 9 | 1001 | $1 \times 2^3 + 0 \times 2^2 + 0 \times 2^1 + 1 \times 2^0$ |
| 10 | 1010 | $1 \times 2^3 + 0 \times 2^2 + 1 \times 2^1 + 0 \times 2^0$ |

Decimal numerals represented by binary digits

If you want to try converting numbers yourself, search for "binary translator" or similar in a search engine. Here's an example website: https://www.binarytranslator.com/

Every single binary digit is called a bit. For example, the number 7 in the table above has 3 bits (111). Every 8 bits make a single byte. The bit is the smallest unit of data in computing.
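You can also do the conversion by hand with repeated division. Divide by 2, keep the remainder as the next bit (from right to left), and repeat until nothing is left. Going the other way, add up each digit times its power of 2, exactly as in the conversion column. Here is a minimal sketch; `to_binary` and `from_binary` are illustrative names, and Python's built-in `bin()` is used only to cross-check the result:

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to binary digits by repeated division by 2."""
    if n == 0:
        return "0"
    bits = []
    while n > 0:
        bits.append(str(n % 2))  # the remainder is the next bit, right to left
        n //= 2
    return "".join(reversed(bits))


def from_binary(text: str) -> int:
    """Add up each digit times its power of 2, as in the conversion column."""
    return sum(int(bit) * 2 ** power for power, bit in enumerate(reversed(text)))


print(to_binary(10))                 # 1010
print(from_binary("111"))            # 7
print(len(to_binary(255)))           # 8 (255 is the largest number one byte can hold)
print(to_binary(10) == bin(10)[2:])  # True (cross-check with the built-in bin())
```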

1-4. Operating Systems

What is an operating system?

An operating system (OS) is the software that, after being initially loaded into the computer by the boot process, manages and runs the other programs and services on a computer. Programs make use of the operating system by requesting services through a defined application programming interface (API). In addition, users can interact directly with the operating system through a user interface, such as a command-line interface (CLI) or a graphical user interface (GUI).

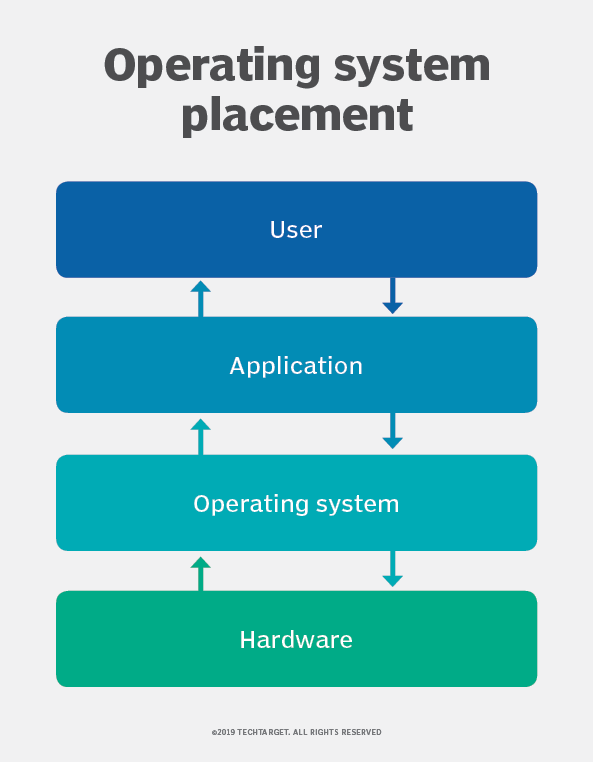

To put it more simply, the operating system takes control of the computer's resources (CPU, RAM, GPU, etc.), manages them and acts as a bridge between applications and those resources.

For hardware functions such as input and output and memory allocation, the operating system acts as an intermediary between programs and the computer hardware, although the application code is usually executed directly by the hardware and frequently makes system calls to an OS function or is interrupted by it. Operating systems are found on many devices that contain a computer – from cellular phones and video game consoles to web servers and supercomputers.
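The functions in Python's standard `os` module are thin wrappers around the operating system's own calls. They give a feel for how a program asks the OS for a service instead of touching the hardware directly. Here is a small sketch, assuming a writable temporary directory; the file name `demo.txt` is arbitrary:

```python
import os
import tempfile

# Each call below asks the operating system for a service. On Linux and macOS
# these functions map closely onto the open, write and close system calls.
path = os.path.join(tempfile.gettempdir(), "demo.txt")
flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC | getattr(os, "O_BINARY", 0)  # O_BINARY exists only on Windows
fd = os.open(path, flags)                   # the OS hands back a small integer "file descriptor"
written = os.write(fd, b"hello, kernel\n")  # the OS reports how many bytes it wrote
os.close(fd)                                # give the descriptor back to the OS

print(written)      # 14
print(os.getpid())  # the process ID the OS assigned to this program (varies)
os.remove(path)     # ask the OS to delete the file again
```

The program never talks to the disk itself. It only hands requests to the OS, which in turn deals with the hardware.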

In the personal computer market, as of September 2023, Microsoft Windows holds a dominant market share of around 68%. Apple's macOS is in second place (20%), and the various Linux distributions are collectively in third place (7%). In the mobile sector (including smartphones and tablets), as of September 2023, Android's share is 68.92%, followed by Apple's iOS and iPadOS with 30.42%, and other operating systems with 0.66%. Linux distributions are dominant in the server and supercomputing sectors. Other specialized classes of operating systems (special-purpose operating systems), such as embedded and real-time systems, exist for many applications. Security-focused operating systems also exist. Some operating systems have low system requirements (e.g. lightweight Linux distributions), while others have higher ones.

An operating system provides three essential capabilities: It offers a UI through a CLI or GUI; it launches and manages the application execution; and it identifies and exposes system hardware resources to those applications -- typically, through a standardized API.

Every operating system requires a UI, enabling users and administrators to interact with the OS in order to set up, configure and even troubleshoot the operating system and its underlying hardware. There are two primary types of UI available: CLI and GUI.

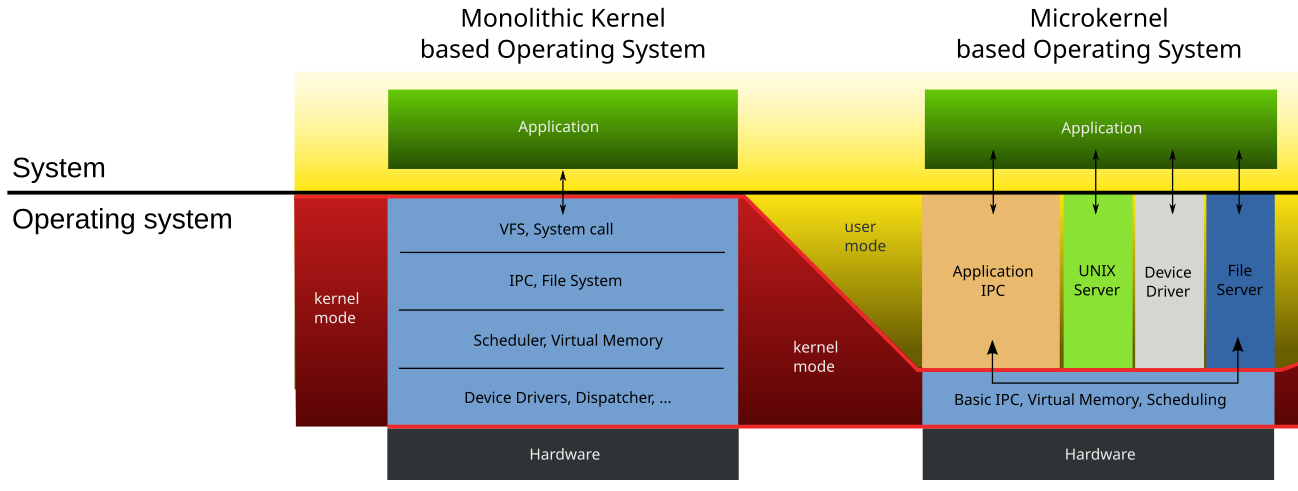

The architecture of an OS

The CLI, or terminal mode window, provides a text-based interface where users rely on the traditional keyboard to enter specific commands, parameters and arguments related to specific tasks. The GUI, or desktop, provides a visual interface based on icons and symbols where users rely on gestures delivered by human interface devices, such as touchpads, touchscreens and mouse devices.

The GUI is most frequently used by casual or end users that are primarily interested in manipulating files and applications, such as double-clicking a file icon to open the file in its default application. The CLI remains popular among advanced users and system administrators that must handle a series of highly granular and repetitive commands on a regular basis, such as creating and running scripts to set up new personal computers (PCs) for employees.

Looking into the definition of an operating system

An operating system is difficult to define, but it has been called "the layer of software that manages a computer's resources for its users and their applications". Operating systems include the software that is always running, called the kernel, but can include other software as well. The two other types of programs that can run on a computer are system programs, which are associated with the operating system but may not be part of the kernel, and applications, which are all other software.

There are three main purposes that an operating system fulfills:

- Operating systems allocate resources between different applications, deciding when they will receive central processing unit (CPU) time or space in memory. On modern personal computers, users often want to run several applications at once. To ensure that one program cannot monopolize the computer's limited hardware resources, the operating system gives each application a share of the resource, either in time (CPU) or in space (memory). The operating system must also isolate applications from each other, to protect them from errors and security vulnerabilities in another application's code, while still enabling communication between different applications.

- Operating systems provide an interface that abstracts away the details of accessing hardware (such as physical memory) to make things easier for programmers. Virtualization also enables the operating system to mask limited hardware resources; for example, virtual memory can provide a program with the illusion of nearly unlimited memory that exceeds the computer's actual memory.

- Operating systems provide common services, such as an interface for accessing network and disk devices. This enables an application to be run on different hardware without needing to be rewritten. Which services to include in an operating system varies greatly, and this functionality makes up the great majority of code for most operating systems.
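Round-robin scheduling, one of the simplest scheduling policies, shows how an OS can give each application a share of CPU time. Each task runs for a fixed time slice (the quantum) and then goes to the back of the queue. This is a simplified sketch, not how any particular operating system's scheduler works. The task names, work amounts and `quantum` value are hypothetical:

```python
from collections import deque


def round_robin(tasks: dict, quantum: int) -> list:
    """Simulate round-robin scheduling.

    `tasks` maps a task name to the units of CPU time it still needs.
    Returns the (task, time_used) slices in the order the scheduler hands them out.
    """
    queue = deque(tasks.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        used = min(quantum, remaining)  # run for at most one time slice
        timeline.append((name, used))
        if remaining - used > 0:
            queue.append((name, remaining - used))  # not finished: back of the queue
    return timeline


print(round_robin({"browser": 5, "editor": 2, "music": 3}, quantum=2))
# [('browser', 2), ('editor', 2), ('music', 2), ('browser', 2), ('music', 1), ('browser', 1)]
```

No task can hold the CPU for longer than one quantum at a time, so every program keeps making progress.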

Kernel

The core part of an operating system, whose job is to allocate resources and manage programs, is called the kernel. For example, during the Windows boot process, other components such as the login manager, File Explorer and the desktop are started on top of the kernel to deliver the full experience of an operating system.

Kernels are relatively lightweight. For example, the Windows kernel (ntoskrnl.exe), located in the \Windows\System32 directory, is roughly 5 to 10 MB in size. The compressed Linux kernel image (vmlinuz), stored in the /boot directory, is typically of a similar order of magnitude, although the initial RAM disk (initramfs) loaded alongside it and the driver modules kept on disk can add up to much more. They are kept this small so that the system can boot up with the bare minimum. After booting, the kernel starts the necessary programs and services, which take up more resources, especially memory, in order to deliver a usable experience.

Linux And Windows

Windows is a proprietary, commercially licensed operating system, and its source code is not publicly available. It is designed for business owners, other commercial users and even individuals with no computer programming knowledge. It is simple and straightforward to use.

Windows offers features like:

- Multiple operating environments

- Symmetric multiprocessing

- Client-server computing

- Integrated caching

- Virtual memory

- Portability

- Extensibility

- Preemptive scheduling

The first version of Windows, known as Windows 1.0, was released in 1985, ten years after Microsoft was founded. It ran on top of MS-DOS. Following that initial launch, new versions of Windows were rolled out: Windows 2.0 arrived in 1987 and Windows 3.0 in 1990.

In 1995, Windows 95, perhaps the most widely used version up to that point, was released. It was still DOS-based, combining 16-bit and 32-bit code, with a 32-bit user space to enhance the user experience.

Since Windows XP (2001), consumer versions of Windows have been built on the Windows NT architecture instead of DOS. That core architecture hasn’t changed a whole lot since then, despite the vast number of features that have been added to address modern computing.

On the other hand, Linux is a free and open source operating system based on Unix standards which provides a programming interface as well as user interface compatibility. It also contains many separately developed elements, free from proprietary code.

The Linux kernel uses a traditional monolithic design for performance reasons. It is also modular: most drivers can be loaded and unloaded dynamically at run time as loadable kernel modules.
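
On a running Linux system, the currently loaded modules are listed in the text file /proc/modules (the same information the lsmod command prints). As a minimal sketch, the Python function below parses text in that format. The sample lines are made up for illustration, and parse_proc_modules and KernelModule are hypothetical names written for this example, not part of any standard tool.

```python
from dataclasses import dataclass


@dataclass
class KernelModule:
    name: str
    size_bytes: int
    ref_count: int
    used_by: list[str]
    state: str


def parse_proc_modules(text: str) -> list[KernelModule]:
    """Parse text in the /proc/modules format into KernelModule records."""
    modules = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 5:
            continue  # skip blank or malformed lines
        name, size, refs, deps, state = fields[:5]
        # The "used by" column is "-" when no other module depends on this one,
        # otherwise a comma-separated list with a trailing comma.
        used_by = [] if deps == "-" else [d for d in deps.split(",") if d]
        modules.append(KernelModule(name, int(size), int(refs), used_by, state))
    return modules


# Made-up sample lines in the /proc/modules format.
SAMPLE = """\
snd_hda_intel 57344 3 - Live 0x0000000000000000
snd_hda_codec 172032 2 snd_hda_codec_realtek,snd_hda_intel, Live 0x0000000000000000
"""

for module in parse_proc_modules(SAMPLE):
    print(f"{module.name}: {module.size_bytes} bytes, used by {module.used_by or 'nothing'}")
```

On an actual Linux machine you could pass it the real file instead, for example `parse_proc_modules(open("/proc/modules").read())`.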

Linux was created by Finnish student Linus Torvalds, who wanted to create a free operating system kernel that anyone could use. It was launched in 1991, six years after Windows 1.0. At first it was a very bare-bones operating system, without a graphical interface like Windows. From a small code base in its original release to more than 23.3 million lines of source code today, Linux has clearly grown considerably.

Linux was first distributed under the GNU General Public License in 1992.

Linux vs. Windows

Users

There are three types of users in Linux (regular, administrative (root) and service users), whereas Windows has four types of user accounts (Administrator, Standard, Child and Guest).
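
Linux tells these user types apart by their numeric user ID (UID), stored in the /etc/passwd file. UID 0 is always root. Many distributions (for example Debian, Ubuntu and Fedora) reserve UIDs below 1000 for service accounts and start regular users at 1000, but that threshold is configurable (UID_MIN in /etc/login.defs), so the cut-off used below is an assumption. The passwd lines are made up, and classify_user is a hypothetical helper for this sketch.

```python
# Made-up sample lines in /etc/passwd format:
# name:password:UID:GID:comment:home:shell
SAMPLE_PASSWD = """\
root:x:0:0:root:/root:/bin/bash
www-data:x:33:33:www-data:/var/www:/usr/sbin/nologin
nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin
alice:x:1000:1000:Alice:/home/alice:/bin/bash
"""

UID_MIN = 1000       # assumed first UID for regular users (distro-dependent)
NOBODY_UID = 65534   # conventional UID of the unprivileged "nobody" account


def classify_user(uid: int) -> str:
    """Map a UID to one of the three Linux user types, by common convention."""
    if uid == 0:
        return "administrative (root)"
    if uid < UID_MIN or uid == NOBODY_UID:
        return "service"
    return "regular"


for line in SAMPLE_PASSWD.splitlines():
    name, _password, uid, *_rest = line.split(":")
    print(f"{name:<10} UID {uid:>5} -> {classify_user(int(uid))}")
```

The special case for "nobody" is there because that account has a very high UID even though it is a service account, so a plain "below 1000" rule would misclassify it.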

Kernel

Linux uses a monolithic kernel, in which drivers and core services run together in kernel space; this takes up more space but is efficient, because the parts of the kernel communicate directly. Windows uses a hybrid kernel that borrows ideas from the micro-kernel design. A pure micro-kernel keeps the kernel itself small by running other services as separate components, at some cost in efficiency, and Windows applies this idea only partly. As a result, the Linux kernel together with its built-in drivers and loadable modules takes up more space than the Windows kernel image, whose drivers are loaded from separate files.

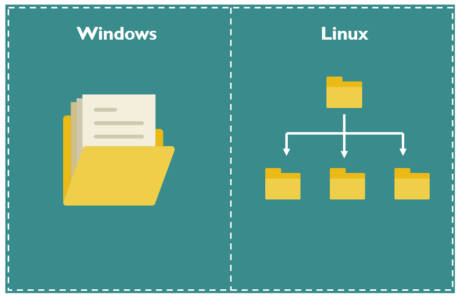

File Systems

In Microsoft Windows, files are stored in folders on separate drives, each with its own letter, such as C:, D: and E:. In Linux, files are organized in a single tree that starts at the root directory (/) and branches out into various subdirectories; additional drives are attached (mounted) somewhere inside that tree. In Linux, everything is treated like a file: directories are files, regular files are files, and connected devices (such as printers, mice and keyboards) are also represented as files.
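
The difference between drive letters and a single tree shows up directly in how paths are split. A small sketch using Python's standard pathlib module: the "pure" path classes only manipulate path strings, so this runs on any operating system. The example paths themselves are made up.

```python
from pathlib import PurePosixPath, PureWindowsPath

# Pure paths only manipulate path strings, so this runs on any OS.
windows_file = PureWindowsPath(r"C:\Users\alice\notes.txt")
linux_file = PurePosixPath("/home/alice/notes.txt")

print(windows_file.drive)   # the drive letter: C:
print(windows_file.parts)   # ('C:\\', 'Users', 'alice', 'notes.txt')

print(repr(linux_file.drive))  # no drive letters on Linux: ''
print(linux_file.parts)        # ('/', 'home', 'alice', 'notes.txt')

# On Linux, a device is just another path in the same tree (here: the mouse device).
print(PurePosixPath("/dev/input/mice").parts)  # ('/', 'dev', 'input', 'mice')
```

Every Linux path begins at the same root, "/", while each Windows path begins at its own drive.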

Security

Many Windows users have faced security and stability issues at some point. Because Windows is so widely used, hackers and spammers target it frequently. Consumer versions of Windows were originally designed for ease of use on a single-user PC without a network connection, and did not have security features built in. Microsoft regularly releases security patches through its Windows Update service. These go out once a month, although critical updates are made available sooner when necessary.

Windows users may also run into the Blue Screen of Death (BSOD), an error screen shown when the system hits a critical error it cannot recover from. When that happens, the PC has to be restarted, to the user's understandable frustration.

On the other hand, Linux was built on a multi-user architecture from the start, following Unix, which contributes to its reputation for stability compared with the single-user origins of consumer Windows. Because Linux is community-driven and monitored by developers from every corner of the world, new problems are often fixed quickly, sometimes within hours, and the necessary patches can be released soon after.

Compatibility

Windows takes the win here. It is the most popular desktop operating system in the world and has been for a long time, so it is reasonable to expect it to be the most compatible. Most applications and video games are released on Windows first. Windows leads on the x86 architecture, but on ARM-based systems Linux is more compatible: thanks to its open-source nature and mobile operating systems built on it, such as Android, Linux support for ARM has grown considerably.

Source Code

Linux is an open-source operating system, whereas Windows is commercial. Linux users have access to the source code of the kernel and can alter it to suit their needs, whereas Windows users do not have access to the source code.

This has its own advantages and drawbacks: bugs in the OS get fixed at a rapid pace, but malicious developers can also study the code and take advantage of any weakness they find.

In Windows, only selected parties have access to the source code.

Programming

Linux supports almost all of the major programming languages (Python, C/C++, Java, Ruby, Perl, etc.). Moreover, it offers a vast range of applications useful for programming.

Many developers find the Linux terminal far superior to the Windows command line. Many libraries are developed natively for Linux, and a lot of programmers point out that they can get things done easily using the package manager. The ability to write scripts in different shells is also one of the most compelling reasons why programmers prefer Linux.

Linux also comes with native support for SSH, which helps you manage your servers quickly. Package-manager commands such as apt-get (on Debian-based distributions) can be included in your scripts as well, which further makes Linux a popular choice among programmers.

Virtualization

To put it simply, virtualization means running multiple operating systems within one operating system. It is widely used in servers and data centers. How virtualization works in data centers is covered in the next chapter, Networks.

1-5. Open-source Community

You have probably heard the term open-source in the digital world. Open-source means that the source code of a piece of software is publicly available and people can freely contribute to it. Within the terms of its license, people have the freedom to modify, delete or add to the code, or use the open-source software inside their own projects. For example, the Linux kernel is open-source: you can create a Linux-based operating system and name it after yourself! Windows, however, is not open-source. Its source code is not publicly available, and only Microsoft's own developers work on it.

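Even if Windows source code were leaked from Microsoft, people still could not legally use it to their advantage; doing so would end up in a legal battle with Microsoft. By contrast, anyone can build on and modify Chromium, the open-source browser project that Microsoft Edge is based on, because it is released under a permissive BSD-style license. Edge itself is proprietary, but Microsoft does publish other projects, such as the source code of Visual Studio Code, under the MIT license.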

Open-source projects have a lot of advantages, and open-source software gives a reassuring feeling. Because anyone can inspect the code, it is much harder to hide bloat or ads in it. It also tends to be secure: people can openly read the source code and report any vulnerabilities they find, so they get patched quickly. There are a lot of great open-source programs, and you can find them on websites like GitHub or GitLab.

You have probably heard of the Zapya or Shareit applications. If you have used them recently, you have probably experienced a lot of bloatware and unwanted advertisements. Both Zapya and Shareit are owned by big corporations, and they are popular largely because of aggressive advertising. There are many open-source programs that perform better than these two, but since no corporation is advertising them, they stay in the shadows. A good open-source, bloat-free and ad-free alternative to Zapya and Shareit is LocalSend; check out its GitHub repository: https://github.com/localsend/localsend

Reverse Engineering

Most programs we use today are compiled and packaged so that we can run them directly. Compiled programs are not human-readable (try opening any .exe file in Notepad); they are stored in binary format. You see "nonsense" in Notepad because it tries to interpret the binary data as text and fails, since the binary format is meant to be understood by the machine, not by people.
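
To see what Notepad is struggling with, you can look at the raw bytes as numbers instead of text. The sketch below prints a simple hex dump: the offset, each byte in hexadecimal, and the printable characters. The sample bytes are assumed to be the first 16 bytes of a typical Windows .exe file, which starts with the letters "MZ"; hex_dump is a hypothetical helper written for this example.

```python
def hex_dump(data: bytes, width: int = 16) -> str:
    """Return a hex dump: offset, byte values in hex, printable characters."""
    lines = []
    for offset in range(0, len(data), width):
        chunk = data[offset:offset + width]
        hex_part = " ".join(f"{b:02x}" for b in chunk)
        # Show printable ASCII characters as-is and everything else as a dot.
        text_part = "".join(chr(b) if 32 <= b < 127 else "." for b in chunk)
        lines.append(f"{offset:08x}  {hex_part:<{width * 3}} {text_part}")
    return "\n".join(lines)


# Assumed sample: the first 16 bytes of a typical .exe file (the DOS "MZ" header).
sample = bytes.fromhex("4d5a90000300000004000000ffff0000")
print(hex_dump(sample))
```

Only the first two bytes happen to map to readable letters; the rest are values that have no sensible text meaning, which is exactly the "nonsense" Notepad shows. To inspect a real file, you could pass it `open(path, "rb").read()` instead.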

You have probably heard about pirated software or video games. Paid software and video games are usually the property of a corporation and are not open-source. So how do crackers create keygens and unlock such software without access to the source code? This is where reverse engineering comes in. Instead of turning source code into an executable file, they start from the executable file and work backwards toward readable code. They cannot reverse engineer the whole package this way; it would take ages and make it almost impossible to modify anything. Instead, they focus on creating keygens or bypassing the software's lock. What they get back is not the original source code in a regular programming language, but Assembly with memory addresses. We will talk about Assembly and other programming languages in upcoming chapters.
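
Real crackers work with machine-code disassembly, which is hard to show in a short example. As a loose analogy only (an assumption of this sketch, not how native .exe files work), Python compiles functions to bytecode, and the standard dis module can turn that back into assembly-like instructions without needing the source. The toy check_key function and its key are made up for illustration.

```python
import dis


def check_key(key: str) -> bool:
    """A toy license check with a hard-coded key (made up for this example)."""
    return key == "ABCD-1234"


# Print assembly-like bytecode instructions for the compiled function.
dis.dis(check_key)

# The hard-coded key is recoverable from the compiled code alone.
constants = [ins.argval for ins in dis.get_instructions(check_key)
             if ins.opname == "LOAD_CONST"]
print(constants)
```

The exact instructions differ between Python versions, but the hard-coded key is visible in the compiled form, which is one reason naive license checks are easy to break.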

While writing this blog, I had to reinstall my operating system due to some problems and lost my notes on sources. If you recognize any part of this article as your work, reach out to me. I'll try my best to remember which sources I used and credit them.

Sources:

https://en.wikipedia.org/wiki/Operating_system

https://www.techtarget.com/whatis/definition/operating-system-OS

https://www.britannica.com/technology/binary-code

https://www.edureka.co/blog/linux-vs-windows/

https://x.com/Mehrdadlinux/status/1807067143634145724

Gathered, written and modified by rootamin
